Photofield Part 1: Origins: Gallery Begins: The Beginning

Everyone and their dog has their own homegrown photo gallery software nowadays. This is the story of one of those: Photofield. What it is, why it exists, why it’s unique, and maybe some cool tech stuff.

Contents

- What is it?

- Wait… why? We already have X!

- The annoying problem

- The solution design process

- 10 steps back first

- I’ve seen this before

- I’ve not seen this before

- In conclusion

What is it?

A non-invasive local photo viewer with a focus on speed and simplicity. — Me, photofield.dev

Wait… why? We already have X!

Don’t you worry about X, let me worry about blank! In any case, I’m sorry, but I’m going to have to send you back in time to the first Corona lockdowns in 2020, when X did not exist / I didn’t know about it / I didn’t like it.

So there I am, amidst a global pandemic with an unprecedented global response, trying to conserve some energy after narrowly avoiding the whole sourdough thing. I have to focus it somewhere. Coincidentally, I also have an annoying problem to solve.

The annoying problem

Actually, it’s two problems:

- I have a lot of personal photos, relatively speaking. Like hundreds of thousands.

- Synology Moments is slow.

Let me explain. So at this point I’ve already been bitten by the self-hosting bug. This means that while Google Photos is pretty great, I cannot quite get myself to give my entire life over to a corporation just yet[1]. This explains why I have a NAS with Synology Moments[2], which is genuinely quite an impressive offering, especially since it runs fully locally.

But the problem is that it starts breaking down if you have a personal photo collection of >300k photos. Why? It involves a rather unfortunate SQL query that means loading the timeline takes minutes, minutes! But that’s a story[3] for another time…

The solution design process

Of course, a reasonable person would say one of the following things, in order from most reasonable to raving madman:

1. What am I doing? I should just dump everything on Google Photos

2. It’s not a big deal, I can wait a bit for the photos to load

3. I should delete some photos to make it go faster and save space

4. I should get a faster NAS so that the timeline loads faster

5. Maybe a software update will fix it, let’s see!

6. I should report it as a bug, maybe they will take a look

7. I should evaluate other software

8. …

9. …

10. …

11. I should hack Moments to make it go faster[3]

12. …

13. …

14. I’M GONNA MAKE MY OWN PHOTO GALLERY AND IT’S GOING TO BE FAST AND GOOD AND SO ON BECAUSE HOW HARD CAN THAT BE, RIGHT???

Of course I immediately jump over the first 10 points, take a brief stop at #11 and then start dumping all of my cabin fever energy into #14 like a normal person.

10 steps back first

So the more I pace, the more I start “first principling” photo galleries. What is really the problem being solved here?

It’s just a bunch of images in a row

Regardless of the implementation, at the end of the day, a photo gallery is really a bunch of image rectangles in some kind of arrangement or grid. Everything else is either an optimization or an extension.

So why is it hard?

Well, just dumping a bunch of <img> tags into a page can go a long way and there are many solutions that do just this. But as with everything, the more you want it to ✨scale✨, the more it starts hating your soul and sacrifices have to be made.

You can only go so far dumping tens or hundreds of 10 MB JPEGs at the browser before your network, memory, or CPU gives up. That’s a lot of data and a lot of pixels!
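To put rough numbers on it, here is a back-of-the-envelope sketch. The inputs are assumptions for illustration only: 10 MB per JPEG file, 12-megapixel photos, and a browser holding each decoded image as an uncompressed bitmap at 4 bytes per pixel (RGBA). Real browsers differ in when and how they decode and discard images.

```python
# Back-of-the-envelope cost of sending full-size photos to a browser.
# All inputs are illustrative assumptions, not measurements.
MB = 1_000_000
JPEG_BYTES = 10 * MB              # compressed file size per photo
PHOTO_PIXELS = 4000 * 3000        # 12 megapixels per photo
BYTES_PER_DECODED_PIXEL = 4       # uncompressed RGBA bitmap


def browser_cost(num_photos: int) -> tuple[int, int]:
    """Return (bytes downloaded, bytes of decoded bitmaps) for num_photos."""
    downloaded = num_photos * JPEG_BYTES
    decoded = num_photos * PHOTO_PIXELS * BYTES_PER_DECODED_PIXEL
    return downloaded, decoded


for n in (10, 100):
    downloaded, decoded = browser_cost(n)
    print(f"{n:>3} photos: {downloaded / MB:,.0f} MB downloaded, "
          f"{decoded / MB:,.0f} MB decoded")
```

Under these assumptions, a hundred photos already means about 1 GB over the network and 4.8 GB of decoded pixels.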

Did you say BIG DATA?

No, no, stop, don’t go away. I don’t mean Big Data big data, but big data big data. Let’s say that we want to display a timeline of all of our 600k photos in a grid 4 photos wide on a monitor.

So, to see all of the photos at 100% zoom, you would need a monitor 4 meters wide and so tall that it would literally reach outer space. I don’t have that kind of money, so I’ll need to settle for reducing that somehow.
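Where do those numbers come from? Here is the arithmetic as a small sketch. It assumes landscape photos of 4000 × 3000 pixels (12 megapixels, consistent with the 7.2 terapixel total for 600k photos) and a monitor pixel pitch of 0.25 mm, roughly that of a common desktop display; outer space is taken to begin at the conventional 100 km Kármán line.

```python
# Physical size of a "monitor" showing every photo at 100% zoom.
# Assumptions: 4000x3000 px photos, 4 per row, 0.25 mm pixel pitch.
PHOTO_W, PHOTO_H = 4000, 3000   # pixels per photo (12 MP)
PIXEL_PITCH_MM = 0.25           # physical size of one screen pixel
KARMAN_LINE_KM = 100            # conventional boundary of outer space


def wall_size_m(photos: int, columns: int) -> tuple[float, float]:
    """Return (width, height) in meters of the full-resolution photo grid."""
    rows = -(-photos // columns)  # ceiling division
    width_px = columns * PHOTO_W
    height_px = rows * PHOTO_H
    return (width_px * PIXEL_PITCH_MM / 1000,
            height_px * PIXEL_PITCH_MM / 1000)


width_m, height_m = wall_size_m(600_000, 4)
print(f"{width_m:.1f} m wide, {height_m / 1000:.1f} km tall")  # 4.0 m wide, 112.5 km tall
print("reaches outer space:", height_m / 1000 > KARMAN_LINE_KM)  # True
```

Four photos of 4,000 pixels make 16,000 pixels, or 4 meters, across; 150,000 rows of 3,000 pixels come to about 112 km, comfortably past the 100 km line.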

Reducing the pixel count

Clearly we cannot show all the photos at 100% zoom, so we need to either make them smaller or show fewer or both. But how many pixels are we talking about reducing?

So for our example collection of 600k photos, at 12 megapixels each, we have 7,200,000,000,000 pixels in total. When you have enough digits to break word wrapping, you know you’re in trouble.

In contrast, a typical computer screen has a lot fewer pixels…

Like a lot a lot fewer: all the photos in our collection have more than 3 million times as many pixels as we can display on a 1080p screen. Let’s, then, just… not?[4]
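The same comparison as a quick calculation, assuming 12 megapixels per photo (the figure that gives 7.2 terapixels for 600k photos) and a 1920 × 1080 screen:

```python
# Total pixels in the example collection vs. a single 1080p screen.
# Assumption: 12 MP per photo, the figure that yields 7.2 terapixels.
PHOTOS = 600_000
PIXELS_PER_PHOTO = 12_000_000
SCREEN_PIXELS = 1920 * 1080          # 2,073,600 pixels, about 2 MP

total = PHOTOS * PIXELS_PER_PHOTO    # 7,200,000,000,000 pixels
ratio = total / SCREEN_PIXELS

print(f"collection: {total:,} px")
print(f"screen:     {SCREEN_PIXELS:,} px")
print(f"ratio:      {ratio:,.0f}x")  # prints "ratio:      3,472,222x"
```

That works out to roughly 3.5 million screens’ worth of pixels.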

How not to render 7 terapixels

It’s a simple two-step process.

- Render the ~2 megapixels that you need

- Do not render the remaining ~7 terapixels

Ok, well maybe step 2 is not so simple. Now a completely reasonable and productive developer already has the answer to this conundrum.

I know, I’ll just generate thumbnails at a few predefined sizes and then serve the right size in the right situation. — Completely Reasonable and Productive Developer

Yes, yes, reasonable developer, but that’s only 3-steps-back thinking. You’re not seeing the big-big-big picture here! What are we trying to do again?

- Only process photos that are visible on screen, i.e. view culling

- Only process the pixels of photos that are visible on screen, i.e. resizing or downsampling

So if we want to show the gallery in e.g. a browser, we would ideally only be processing at most the number of pixels the browser window has.
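The two ideas above fit in a few lines. This is an illustrative sketch rather than Photofield’s code: `Rect`, `visible_photos`, and `pixel_budget` are hypothetical names, and the scene is assumed to be a flat plane of non-overlapping photo rectangles viewed through a rectangular viewport. Culling keeps only the photos that overlap the viewport; downsampling caps each one at the screen pixels it actually covers, so the total can never exceed the screen itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in scene coordinates (hypothetical type)."""
    x: float
    y: float
    w: float
    h: float

    def intersection(self, other: "Rect") -> Optional["Rect"]:
        """Return the overlap of two rectangles, or None if they don't overlap."""
        left, top = max(self.x, other.x), max(self.y, other.y)
        right = min(self.x + self.w, other.x + other.w)
        bottom = min(self.y + self.h, other.y + other.h)
        if right <= left or bottom <= top:
            return None
        return Rect(left, top, right - left, bottom - top)


def visible_photos(photos: list[Rect], view: Rect) -> list[int]:
    """View culling: indices of photos that overlap the viewport."""
    return [i for i, p in enumerate(photos) if p.intersection(view) is not None]


def pixel_budget(photos: list[Rect], view: Rect,
                 screen_w: int, screen_h: int) -> dict[int, int]:
    """Downsampling: screen pixels each visible photo actually covers."""
    sx, sy = screen_w / view.w, screen_h / view.h  # screen px per scene unit
    budget = {}
    for i in visible_photos(photos, view):
        part = photos[i].intersection(view)
        budget[i] = round(part.w * sx * part.h * sy)
    return budget


# 1,000 photos of 4000x3000 scene units, 4 per row; the viewport shows 2 rows.
grid = [Rect((i % 4) * 4000, (i // 4) * 3000, 4000, 3000) for i in range(1000)]
view = Rect(0, 0, 16_000, 6_000)
budget = pixel_budget(grid, view, 1920, 720)
print(len(budget), sum(budget.values()))  # prints: 8 1382400
```

The linear scan over every photo keeps the sketch short; with hundreds of thousands of photos, a spatial index or a row-based lookup would avoid touching all of them.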

But now it feels like we’re trying to write a game engine. Before we go there, I feel like I’ve seen something like this before. After staring at a wall for 5 minutes it hits me.

I’ve seen this before

It’s a map! A world map is a big detailed image dataset, a lot bigger even than what we have here. You would also need another Earth if you wanted to see all the images at once at 100% zoom.

Luckily for us, lots of smart people have mostly solved this problem over the last 2 decades. It’s like thumbnails, but in a grid! For example, we can use OpenLayers[5] with debug view to show OpenStreetMap and its tile grid. Each tile is identified by its zoom level z and tile coordinates x and y at that zoom level.
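For reference, this is the well-known OpenStreetMap “slippy map” tile formula (Web Mercator projection, 2^z × 2^z tiles at zoom level z), which turns a longitude and latitude into the x and y of the tile that contains it. It is included only to make the z/x/y addressing concrete; the function name is our own.

```python
import math


def lonlat_to_tile(lon_deg: float, lat_deg: float, z: int) -> tuple[int, int]:
    """Return the (x, y) of the OSM/XYZ tile containing a point at zoom z."""
    n = 2 ** z  # number of tiles along each axis at this zoom level
    lat = math.radians(lat_deg)
    x = math.floor((lon_deg + 180.0) / 360.0 * n)
    y = math.floor((1.0 - math.asinh(math.tan(lat)) / math.pi) / 2.0 * n)
    # Clamp the edges: lon = 180 and latitudes past Web Mercator's
    # ~85.05 degree limit would otherwise fall outside the grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


# Where the equator meets the prime meridian, at zoom levels 0, 1 and 2.
for z in range(3):
    print(z, lonlat_to_tile(0.0, 0.0, z))  # (0, 0), then (1, 1), then (2, 2)
```

Each zoom level doubles the tiles along each axis (quadrupling their count) while every tile keeps the same pixel size, 256 × 256 for the standard OpenStreetMap tiles, so any single view only needs a handful of tiles.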

What if we did that, but with a map of photos instead of a map of the world?

I’ve not seen this before

Let’s say that we virtually lay out a bunch of photos in a dense grid and call that the “scene” we want to render. This scene is the equivalent of the world map, except that instead of the landscape of Earth, we have a landscape, or dare I say, a field of photos — a photofield.

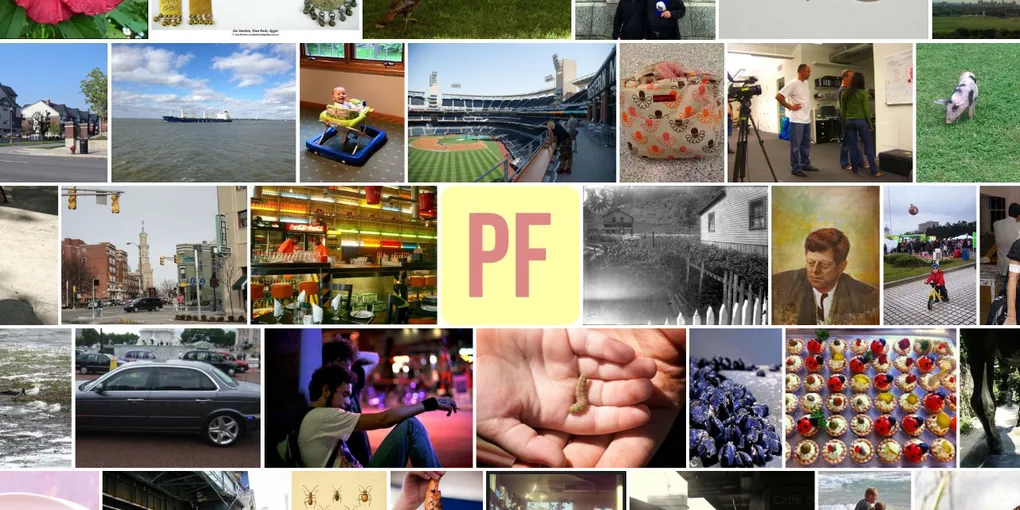
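As a sketch of what producing such a scene (a “layout”) might involve, here is a deliberately simple version under our own assumptions: a fixed number of columns, 4:3 cells, and each photo scaled to fit its cell and centered, based on its aspect ratio (width / height). `layout_grid` and `Placed` are hypothetical names, and Photofield’s real layouts may work differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placed:
    """A photo's rectangle in scene coordinates (hypothetical type)."""
    photo_id: int
    x: float
    y: float
    w: float
    h: float


def layout_grid(aspects: list[float], columns: int, scene_width: float,
                cell_aspect: float = 4 / 3) -> tuple[list[Placed], float]:
    """Lay photos out in a dense grid; return placements and scene height.

    Each photo keeps its aspect ratio (width / height), is scaled to fit
    its cell, and is centered inside it.
    """
    cell_w = scene_width / columns
    cell_h = cell_w / cell_aspect
    placed = []
    for i, aspect in enumerate(aspects):
        col, row = i % columns, i // columns
        if aspect >= cell_aspect:  # relatively wide: fill the cell's width
            w, h = cell_w, cell_w / aspect
        else:                      # relatively tall: fill the cell's height
            w, h = cell_h * aspect, cell_h
        x = col * cell_w + (cell_w - w) / 2
        y = row * cell_h + (cell_h - h) / 2
        placed.append(Placed(i, x, y, w, h))
    rows = -(-len(aspects) // columns)  # ceiling division
    return placed, rows * cell_h


placed, height = layout_grid([4 / 3, 3 / 4, 1.0, 16 / 9, 4 / 3],
                             columns=4, scene_width=1600)
for p in placed:
    print(p)
print("scene height:", height)
```

The output is just a list of rectangles; nothing about it depends on the photos’ pixels, which is what makes it cheap to recompute whenever the collection or the window size changes.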

This means we can use lots of existing tooling like OpenLayers to render our scene at arbitrary resolution and camera view. Since we’re using tiled rendering, it also means we only render the photos and pixels needed, or at least approximately so. Using a subset of the Open Images dataset as an example, that would look a little something like the following.
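Put together, rendering a single tile is mostly bookkeeping: find the slice of the scene the tile covers, cull the photos outside it, and work out where each remaining photo lands in tile pixels. A minimal sketch, assuming the scene sits in a square “world” of side `world` scene units that is split into 2^z × 2^z tiles of 256 pixels at zoom level z (the same scheme as map tiles); `Photo`, `Draw`, `tile_bounds`, and `plan_tile` are hypothetical names, not Photofield’s API.

```python
from typing import NamedTuple

TILE_SIZE = 256  # output pixels along each tile edge, as with map tiles


class Photo(NamedTuple):
    """A photo placed in the scene, in scene units (hypothetical type)."""
    photo_id: int
    x: float
    y: float
    w: float
    h: float


class Draw(NamedTuple):
    """Where (and how big) a photo lands inside one tile, in tile pixels."""
    photo_id: int
    x: float
    y: float
    w: float
    h: float


def tile_bounds(z: int, tx: int, ty: int, world: float) -> tuple[float, float, float]:
    """Return (left, top, side) of the scene square covered by tile (z, tx, ty)."""
    side = world / 2 ** z
    return tx * side, ty * side, side


def plan_tile(photos: list[Photo], z: int, tx: int, ty: int,
              world: float) -> list[Draw]:
    """Cull photos outside the tile and map the rest into tile pixels."""
    left, top, side = tile_bounds(z, tx, ty, world)
    scale = TILE_SIZE / side  # tile pixels per scene unit
    draws = []
    for p in photos:
        if (p.x + p.w <= left or p.x >= left + side or
                p.y + p.h <= top or p.y >= top + side):
            continue  # entirely outside this tile: never loaded or resized
        # A renderer would resize the photo to (w, h) and clip it to the tile.
        draws.append(Draw(p.photo_id, (p.x - left) * scale, (p.y - top) * scale,
                          p.w * scale, p.h * scale))
    return draws


photos = [Photo(0, 0, 0, 400, 300), Photo(1, 600, 0, 400, 300),
          Photo(2, 0, 600, 400, 300)]
print(plan_tile(photos, z=1, tx=1, ty=0, world=1024))
# prints [Draw(photo_id=1, x=44.0, y=0.0, w=200.0, h=150.0)]
```

Zooming in only changes which tiles are requested: a tile deep in the zoom levels touches just the few photos under it, at a size close to what the screen will actually show.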

How does this possibly make any sense?

Maybe it does, maybe it doesn’t. If you now have the following questions, you’d be quite reasonable and I’ll try to answer them.

Q: That’s dumb, you just made thumbnails, but worse

A: First of all, that’s not a question. Second of all, yes, maybe, but not really.

Q: Wouldn’t you have to change the scene every time you add a photo?

A: Yes.

Q: And every time you change the screen size or want to lay out the photos differently?

A: Yup.

Q: And for each device that’s viewing it separately?

A: Yeah.

Q: So again, thumbnails, but worse. It’ll take like thousands of times more space, and you would need to pregenerate tiles for every combination of screen size and collection, and regenerate them every time anything changes. A terrible, terrible idea.

A: Whoa there, who said anything about pregenerating? You only need to generate.

Q: What?

A: Nobody is saying you need to save any of these tiles anywhere. You also don’t need to save the scenes anywhere as long as you can generate them reasonably quickly. Remember, if you can get away with not doing something expensive or annoying, don’t do it.

Q: Does that mean that you’d generate tiles and scenes on-the-fly?

A: Pretty much. Generate a scene when needed (call it a “layout” event), then render the tiles on-the-fly by loading the photos visible in a tile and resizing them to fit.
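A deliberately tiny sketch of that on-the-fly step, with loud assumptions: images are plain nested lists of pixel values, `load_pixels` is a hypothetical stand-in for reading and decoding a photo, placements arrive as `(photo_id, x, y, w, h)` in tile pixels (as a layout step might produce), and resizing is nearest-neighbour sampling. A real renderer would use an image library and a better resampling filter, but the principle is the same: nothing is stored, and each tile is computed only when it is requested.

```python
import math
from typing import Callable, Iterable, Sequence


def render_tile(draws: Iterable[tuple[int, float, float, float, float]],
                load_pixels: Callable[[int], Sequence[Sequence[int]]],
                tile_size: int = 256, background: int = 0) -> list[list[int]]:
    """Render one tile on demand; nothing is cached or written to disk.

    draws: (photo_id, x, y, w, h) placements in tile pixels.
    load_pixels: hypothetical stand-in for decoding a photo from storage.
    Resizing samples the nearest source pixel at each destination pixel center.
    """
    tile = [[background] * tile_size for _ in range(tile_size)]
    for photo_id, x, y, w, h in draws:
        src = load_pixels(photo_id)  # only photos placed in this tile are loaded
        src_h, src_w = len(src), len(src[0])
        # Candidate destination pixels, clipped to the tile.
        x0, x1 = max(0, math.floor(x)), min(tile_size, math.ceil(x + w))
        y0, y1 = max(0, math.floor(y)), min(tile_size, math.ceil(y + h))
        for ty in range(y0, y1):
            v = (ty + 0.5 - y) / h  # pixel center, relative to the photo
            if not 0.0 <= v < 1.0:
                continue
            row = src[min(src_h - 1, int(v * src_h))]
            for tx in range(x0, x1):
                u = (tx + 0.5 - x) / w
                if 0.0 <= u < 1.0:
                    tile[ty][tx] = row[min(src_w - 1, int(u * src_w))]
    return tile


# A 4x4 "photo" with pixel values 0..15, downsampled into a 2x2 tile.
photo = [[r * 4 + c for c in range(4)] for r in range(4)]
tile = render_tile([(7, 0, 0, 2, 2)], lambda photo_id: photo, tile_size=2)
print(tile)  # [[5, 7], [13, 15]]
```

The resampling loop runs once per covered tile pixel, regardless of how large the source photos are.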

Q: Isn’t that going to be terribly slow?

A: Well, it’s certainly not as fast as serving static files. But CPUs and local storage are fast, while networks and browsers are slow, so it kinda works better than you’d think?

Q: This raises even more questions!!! How do you click on photos? What about timelines and scrolling? Erghhh…

A: Well I ain’t got the whole week, so those will have to wait. Meanwhile, try out the demo yourself.

In conclusion

The weird idea of treating photo galleries as if they were maps sort of worked, and I haven’t spotted a similar solution elsewhere. Check out photofield.dev for the features/docs/demo. The code is open source under the MIT license at github.com/smilyorg/photofield.

Send me an email or toot if you have any ideas, comments, questions.

Footnotes

[1] Thanks, Europe!

[2] Now Synology Photos, apparently. Maybe it has the same problem, maybe it doesn’t. I haven’t upgraded yet 🤷‍♂️

[3] Ah yes, the story of trying to hack Moments. What a wonderfully terrible idea, which I should have written about at the time.

[4] Funnily enough, this is the best performance optimization principle to go by: don’t do the things you think you need to do but actually don’t.

[5] Honorable mention goes to OpenSeadragon, which was used in the first prototypes.